TechTakes

Big brain tech dude got yet another clueless take over at HackerNews etc? Here's the place to vent. Orange site, VC foolishness, all welcome.

This is not debate club. Unless it’s amusing debate.

For actually-good tech, you want our NotAwfulTech community.

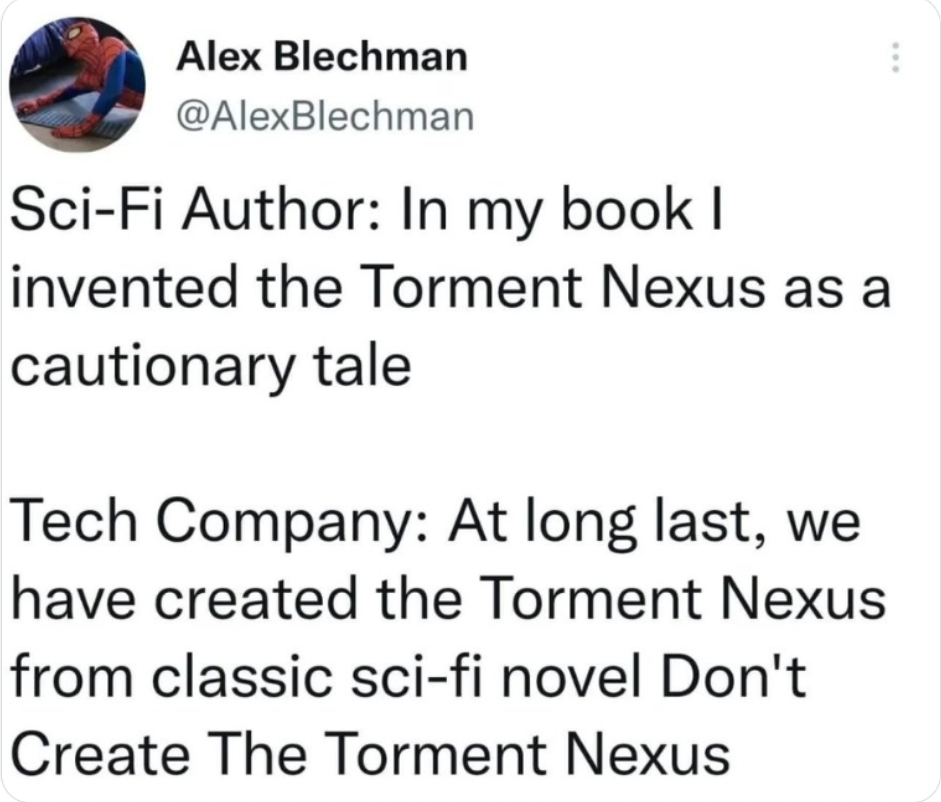

Should be the header image or sidebar or whatever for this lemmy.

This comment from the HN discussion is too funny

https://news.ycombinator.com/item?id=37725746

> The number of AI safety sessions I’ve joined where the speakers have no real AI experience talking about potentially bad futures, based on zero CS experience and little ‘evidence’ beyond existing sci-fi books and anecdotes, have left me very jaded on the subject as a ‘discipline’.

"who needs to listen to the poet/writers/painters/sculptors/.... anyway? they're just there to make things that look good in my palazzo garden!"

> Yes, there is a lot of bunk AI safety discussions. But there are legitimate concerns as well.

Hey, don't worry, someone's standing up for--

> AI is close to human level.

Uh, never mind

~~Brawndo~~ Blockchain has got what ~~plants~~ LLMs crave, it's got ~~electrolytes~~ ledgers.

> Thinking otherwise is dumb (and, remember, that’s not you!).

Guy goes through so much effort to mash AI and blockchain together and he decides to be lazy here lol

Also, if believing this stuff is for smart people then just call me a fucking idiot.

I actually get the impression that was letting the mask slip for a moment: “remember, they must at all times think you’re clever! Never let them think otherwise!”

this thing was a fucking slog of bad ideas and annoying memes so I skimmed it, but other than the obvious (blockchains are way too inefficient for any of this to come even close to working), did the author even mention validation? cause if I’m contributing my own data and my own tags then I’m definitely going to either insert a whole bunch of nonsense into the model to get paid, or use an adversarial model to generate malicious data and weights and use that to break the model. the way crypto shitheads fix this is with a centralized oracle, which means all of this is just a pointless exercise in combining the two most environmentally wasteful types of grift tech ever invented

Considering the mix of bad ideas, I suppose the AI model will check itself. Maybe by mining.

This article is an incredibly deep mine of bad takes, wow

Let’s just build a data intensive application on a foundation where we have none of that data nearby to start! Let’s forget about all other prior distribution models! Let’s make faulty rationalists* and leaps of assumptions!

And then you click through the profile:

> I’ve spent a decade working in fintech at AIG, the Commonwealth Bank of Australia, Goldman Sachs, Fast, and Affirm in roles spanning data science, machine learning, software, data engineering, credit, and fraud.

Ah, he’s angling to get a priesthood role if this new faith thing happens. Got it.

*e: this was meant to be “rationalisations” and then my phone keyboard did a dumb. I’m gonna leave it that way because it’s funnier

I don't care what bad tech bingo card you're using, this has to be bingo.

Get pennies for enabling the systems that will put you out of work. Sounds like a great deal!

I still don't understand why these people felt that art was something that had to be automated. I suppose people must have felt the same way when the printing press was invented, but while AI is quicker for the end user it requires significantly more in terms of energy and resources.

If you're into blockchain, AI is somewhere where blockchain would obviously be a huge boon. It's only in the real world where it's a laughable combination.

After going in suspicious, this actually sounds like a pretty decent idea.

The technology isn't stopping or going away any more than the cotton gin did. May as well put control in as many hands as possible. The alternative is putting it under the sole control of a few megacorps, which seems worse. Is there another option I'm not seeing?

no thx

Yeah seriously, you should look into VC work as your next career. You have that rare blend of wilful blindness and optimistic gullibility which seem to be job requirements there, you’ll do great!

Not using blockchains, for a start. Blockchains centralise by design, because of economies of scale.

Everyone puts legal limits in place to prevent LLMs from ingesting their content, the ones who break those limits are prosecuted to the full extent of the law, the whole thing collapses in a downward spiral, and everyone pretends it never happened.

Well, a man can dream.

People don't have data, they have blog posts, vacation photos, and text messages. Talking about personal data as an abstract thing leads to error.