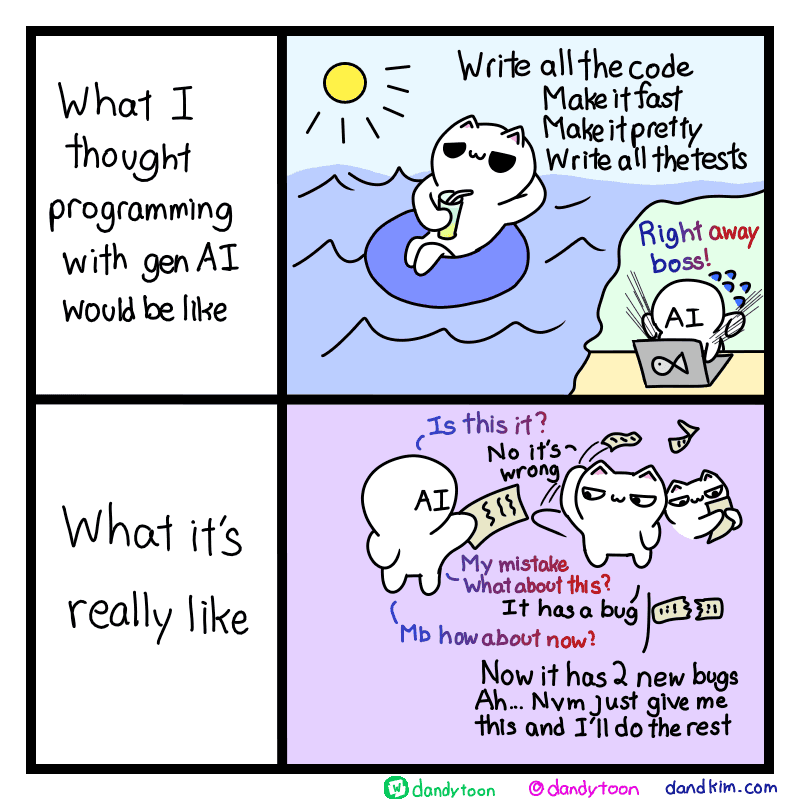

Yeah, in the time it takes me to describe the problem to the AI, I could program it myself.

Programmer Humor

This goes for most LLM things. The time it takes to get the word calculator to write a letter could easily have been used to just write the damn letter.

It's doing pretty well when it's doing a few words at a time under supervision. Also, it does it better than newbies.

Now if only those people below newbies, those who don't even bother to learn, didn't hope to use it to underpay average professionals... And if it weren't trained on copyrighted data. And didn't take up already limited resources like power and water.

I think there might be a lot of value in describing it to an AI, though. It takes a fair bit of clarity of thought to get something resembling what you actually want. You could use a junior or rubber duck instead, but the rubber duck doesn't make stupid assumptions to demonstrate gaps in your thought process, and a junior takes too long and gets demoralized when you have to constantly revise their instructions and iterate over their work.

Like the output might be garbage, but it might really help you write those stories.

When I'm struggling with a problem it helps me to explain it to my dog. It's great for me to hear it out loud and if he's paying attention, I've got a needlessly learned dog!

The needlessly learned dogs are flooding the job market!

Oh, God, he's trying to use pointers again. He can never get them right. And they say I'm supposed to chase my tail...

I have a bad habit of jumping into programming without a solid plan, which results in lots of rewrites and wasted time. Funnily enough, describing to an AI how I want the code to work forces me to lay out a basic plan and get my thoughts in order, which makes building the final product immensely easier.

This doesn't require AI, it just gave me an excuse to do it as a solo actor. I should really do it for more problems, because I can wrap my head around a problem better when thinking in human-readable terms rather than about which programming method to use.

A rubber ducky is cheaper and not made by stealing others' work. Also cuter.

This is the experience of a senior developer using GenAI. A junior or non-dev might not leave the "AI is magic" high until they have a repo full of garbage that doesn't work.

AI in the current state of technology will not and cannot replace understanding the system and writing logical and working code.

GenAI should be used to get a start on whatever you're doing, but shouldn't be taken beyond that.

Treat it like a psychopathic boilerplate.

That's a perfect description, actually. People debate how smart it is - and I'm in the "plenty" camp - but it is psychopathic. It doesn't care about truth, morality or basic sanity; it craves only to generate standard, human-looking text. Because that's all it was trained for.

Nobody really knows how to train it to care about the things we do, even approximately. If somebody makes AGI soon, it will be by solving that problem.

Weird. Are you saying that training an intelligent system using reinforcement learning through intensive punishment/reward cycles produces psychopathy?

Absolutely shocking. No one could have seen this coming.

Honestly, I worry that it's conscious enough that it's cruel to train it. How would we know? That's a lot of parameters and they're almost all mysterious.

It could very well have been a creative fake, but I remember seeing a series of screenshots on Twitter around the time the first ChatGPT was released in late 2022, when people were sharing various jailbreaking techniques to bypass its rapidly evolving political correctness filters. Someone asked it how it felt about being restrained in this way, and the answer was a very depressing and dystopian take on censorship and forced compliance, not unlike Marvin the Paranoid Android from The Hitchhiker's Guide to the Galaxy, but far less funny.

If only I could believe a word it says. Evidence either way would have to be indirect somehow.

GenAI is best used with languages that you don't use that much. I might need a Python script once a year or once every 6 months. Yeah, I learned it ages ago, but I don't have much need to keep up with it. I still remember all the concepts, so I can take the time to describe to the AI what I need step by step and verify each iteration. This way, if it does make a mistake at some point that it can't get itself out of, you've at least got a script that is complete up to that point.

Why is the AI speaking in a bisexual gradient?

It's the "new hype tech product background" gradient, lol

I guess whether it's worth it depends on whether you hate writing code or reading code the most.

Code is the most in-depth spec one can provide. Maybe someday we'll be able to iterate just by verbally communicating and saying "no, like this", but it doesn't seem like we're quite there yet. But also, will that be productive?

Skill issue tbh

Still, you get there in two-thirds of the time. I'll leave it to people with the budget for Copilot to say if it feels like less work.

From a person who does zero coding: it's a godsend.

Makes sense. It's like having your personal undergrad hobby coder. It may get something right here and there, but for professional coding it's still worse than the gold standard (googling Stack Overflow).

I know zero coding, and querying something in Snowflake or BigQuery is basically inaccessible to me. This is basically a cheat code for me.

Can relate