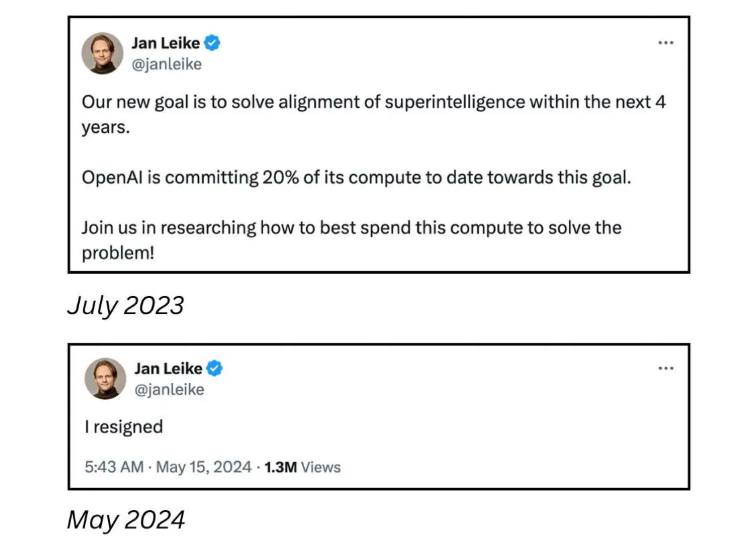

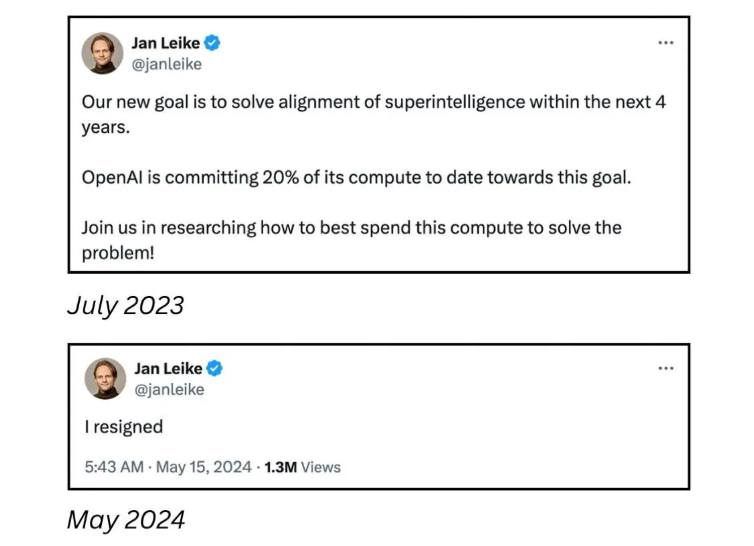

this post was submitted on 15 May 2024

SneerClub

Hurling ordure at the TREACLES, especially those closely related to LessWrong.

AI-Industrial-Complex grift is fine as long as it sufficiently relates to the AI doom from the TREACLES. (Though TechTakes may be more suitable.)

This is sneer club, not debate club. Unless it's amusing debate.

[Especially don't debate the race scientists, if any sneak in - we ban and delete them as unsuitable for the server.]

See our twin at Reddit

founded 2 years ago
