Reddthat Announcements

Main Announcements related to Reddthat.

- For all support relating to Reddthat, please go to !community@reddthat.com

Hello Reddthat,

Another month has flown by. It is now "Spooky Month". That time of year where you can buy copious amounts of chocolate and not get eyed off by the checkout clerks...

Lots has been happening at Reddthat over this last month. The biggest news is our new admin team!

New Administrators

Since our last update we have welcomed 4 new Administrators to our team! You might have seen them around answering questions or posting their regular content. Mostly we have been working behind the scenes: moderating, planning, and streamlining a few things that one person cannot do on their own.

To our new admins: thank you so much for helping us all out, and I hope you will continue contributing your time to Reddthat.

Kbin federation issues

Most of our newly appointed admins' time has been taken up with moderating our federated space rather than the content on Reddthat! As discussed in our main post (over here: https://reddthat.com/post/6334756), we have chosen to remove specific kbin communities where the majority of spam was coming from. This was a tough decision, but an unfortunate and necessary one.

We hope that it has not caused an issue for anyone. In the future once the relevant code has been updated and kbin federates their moderation actions, we will gladly 'unblock' those communities.

Funding Update & Future Server(s)

We are currently trucking along with 2 new recurring donators in September, bringing us to 34 unique donators! I have updated our donation and funding post with the names of our recurring donators.

The upcoming changes to Lemmy and pict-rs will give us the opportunity to investigate becoming highly available, where we run 2 sets of everything on 2 separate servers, or to scale out our picture services to allow for bigger pictures and faster upload response times. Big times ahead regardless.

Upcoming Lemmy v0.19 Breaking Changes

A couple of serious changes are coming in v0.19 that people need to be aware of: a 2FA reset, and instance-level blocking per user.

All 2FA will be effectively nuked

This is a Lemmy-specific issue where two-factor authentication (2FA) has been inconsistent for a while. The reset will allow people who have 2FA enabled and cannot get access to their account to log in again. Unfortunately, if you are one of the people successfully using 2FA, you will also need to go through the setup process again.

Instance blocks for users.

YaY!

No longer do you have to wait for the admins to defederate, now you will be able to block a whole instance from appearing in your feeds and comment threads. If you don't like a specific instance, you will be able to "defederate" yourself!

How this will work on the client side (via the web or applications) I am not too certain, but it is coming!
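Since the client-side details aren't settled yet, here is only a rough Python sketch of what a personal instance block amounts to: hiding anything whose author or community lives on an instance you've blocked. The post fields (creator, community) and the example URLs are hypothetical; this is not Lemmy's implementation or API.

```python
from urllib.parse import urlparse


def instance_of(actor_url: str) -> str:
    """Return the (lower-cased) host of an ActivityPub actor or community URL."""
    return urlparse(actor_url).hostname or ""


def filter_feed(posts: list[dict], blocked_instances: set[str]) -> list[dict]:
    """Drop posts whose creator or community is hosted on a blocked instance.

    Each post is assumed (hypothetically) to carry the full actor URLs of its
    creator and community, e.g. "https://example.social/u/alice".
    """
    blocked = {host.lower() for host in blocked_instances}
    return [
        post
        for post in posts
        if instance_of(post["creator"]) not in blocked
        and instance_of(post["community"]) not in blocked
    ]


feed = [
    {"title": "hello", "creator": "https://reddthat.com/u/alice",
     "community": "https://reddthat.com/c/community"},
    {"title": "spam", "creator": "https://spam.example/u/bot",
     "community": "https://reddthat.com/c/community"},
]
print([p["title"] for p in filter_feed(feed, {"spam.example"})])  # ['hello']
```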

Thank you

Thank you again to all our amazing users, and I hope you keep enjoying your Lemmy experiences!

Cheers

Tiff,

On behalf of the Reddthat Admin Team.

Hello Reddthat,

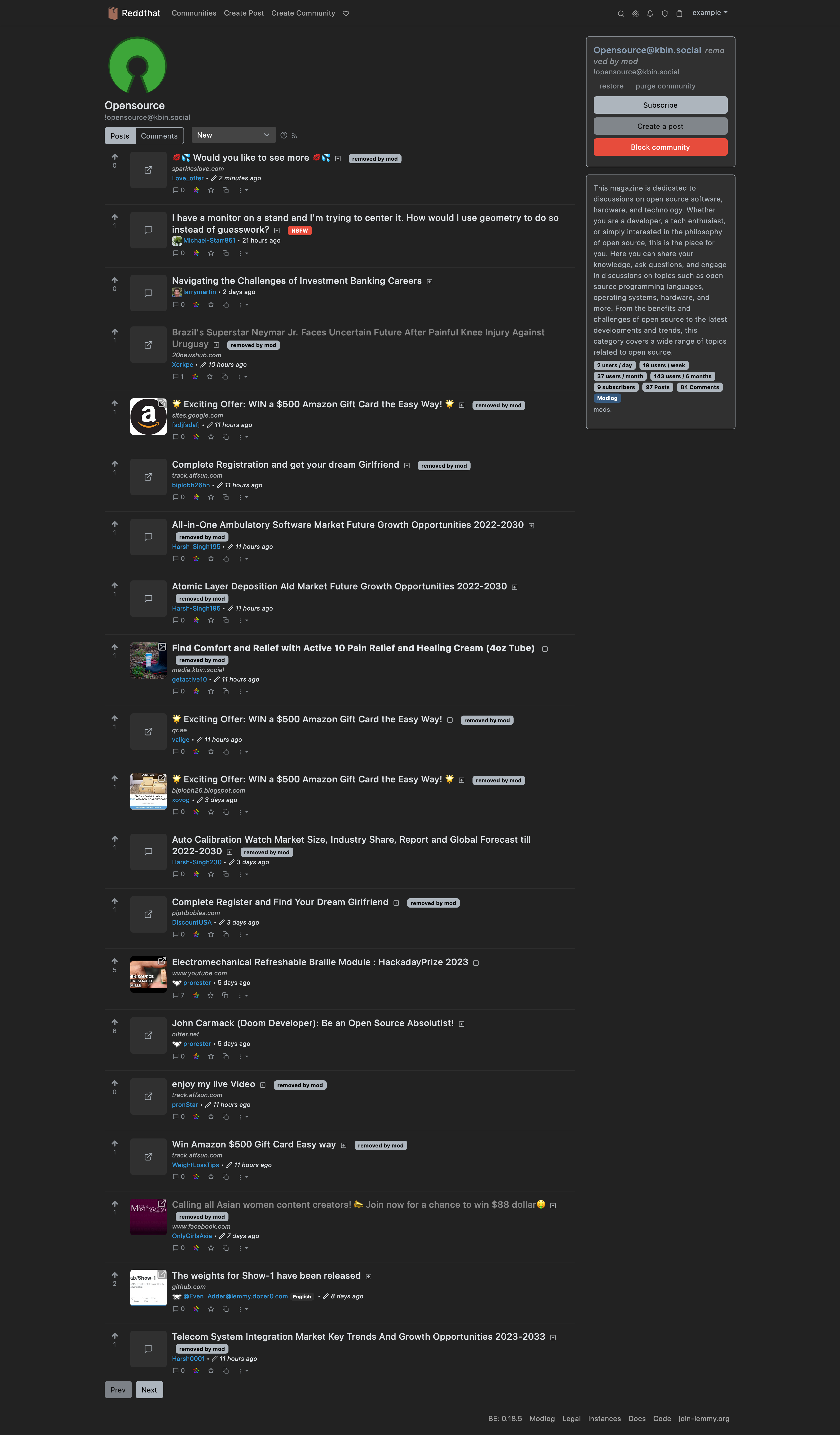

Similar to other Lemmy instances, we're facing significant amounts of spam originating from kbin.social users, mostly in kbin.social communities, or as kbin calls them, magazines.

Unfortunately, there are currently significant issues with the moderation of this spam.

While removal of spam in communities on other Lemmy instances (usually) federates to us and cleans it up, removal of spam in kbin magazines, such as those on kbin.social, is not currently properly federated to Lemmy instances.

In the last couple of days, we've received an increased number of reports of spam in kbin.social magazines, a good chunk of which had already been removed on kbin.social, but these removals never federated to us.

While these reports are typically handled in a timely manner by our Reddthat Admin Team, as reports are also sent to the reporter's instance admins, we've done a more in-depth review of content in these kbin.social magazines.

Just today, we've banned and removed content from more than 50 kbin.social users, who had posted spam to kbin.social magazines within the last month.

Several other larger Lemmy instances, such as lemmy.world, lemmy.zip, and programming.dev have already decided to remove selected kbin.social magazines from their instances to deal with this.

As we also don't want to exclude interactions with other kbin users, we decided to only remove selected kbin.social magazines from Reddthat, with the intention to restore them once federation works properly.

Because we are only removing communities with elevated spam volumes, interactions between Lemmy users and kbin users outside of kbin magazines are not affected. kbin users are still able to participate in Reddthat and other Lemmy communities.

For now, the following kbin magazines have been removed from Reddthat:

- [!random@kbin.social](/c/random@kbin.social)

- [!internet@kbin.social](/c/internet@kbin.social)

- [!fediverse@kbin.social](/c/fediverse@kbin.social)

- [!programming@kbin.social](/c/programming@kbin.social)

- [!science@kbin.social](/c/science@kbin.social)

- [!opensource@kbin.social](/c/opensource@kbin.social)

To get an idea of the spam to legitimate content ratio, here are some screenshots of posts sorted by New:

All the "removed by mod" posts were removed by Reddthat admins, as the removals on kbin.social did not find their way to us.

If you encounter spam, please keep reporting it, so community mods and we admins can keep Reddthat clean.

If you're interested in the technical parts, you can find the associated kbin issue on Codeberg.

Regards,

example and the Reddthat Admin Team

TLDR

Due to spam and technical issues with the federation of spam removal from kbin, we've decided to remove selected kbin.social magazines (communities) until the situation improves.

Hello Reddthat,

It has been a while since I posted a general update, so here we go!

Slight content warning regarding this post. If you don't want to read depressing things, skip to "On a lighter note".

The week of issues

If you are not aware, the last week or so has been a tough one for me & all other Lemmy admins. With the recent attacks of users posting CSAM, a lot of us admins have been feeling a great deal of pressure.

We have been familiarising ourselves with the local laws surrounding our instances, while being completely underwhelmed by the Lemmy admin tools. All of these issues are a first for Lemmy, and the tools do not exist in a way that enables us to respond fast enough, or in a way in which content moderation can be effective.

Even if it happens on another instance, it still affects everyone, as it affects every fediverse instance that federates with it.

As this made "Lemmy" news, it resulted in Reddthat users asking valid questions on our !community@reddthat.com. I responded with the plan I have if we end up getting targeted with CSAM. At this point a user chose violence upon waking up and started to antagonise me for having a plan. This user is a known Lemmy Administrator of another instance. They made a GDPR request for their information. While I have no issue with their GDPR request, I do have an issue with them being antagonising and abusive, for example:

Alternatively, stop attracting attention you fuckhead; a spineless admin saying “I’m Batman” is exactly how you get people to mess with you

Unfortunately, they chose to create multiple accounts to abuse us further. This is not conduct that is conducive to conversation, nor is it conduct befitting a Lemmy admin. As a result, we have now defederated from the instance under the control of that admin.

To combat bot signups, we have moved to using the registration approval system. Not only will this system hopefully help us weed out the bots and people who mean to do us harm, but we can also use it to put the ideals and benefits of Reddthat in front of new users.

This will hopefully help them understand what is and isn't acceptable conduct while they are on Reddthat.

On a lighter note

Stability

Our hosting provider has fixed the server stability issues, and as a bonus we've been migrated to newer infrastructure. What this does for our vCPU performance is yet to be determined, but we have confirmed that our status monitoring service (over here) is working perfectly, detecting issues and alerting me.

Funding

Donations on a monthly basis are now completely covering our monthly costs! I can't thank you enough for these as it really does help keep the lights on.

In August we had 4 new donators, all recurring as well! Thank you so much!

Merch / Site Redesign

I would like to offer merchandise for those who want a hoody, mouse pad, stickers, etc.

Unfortunately I am a backend person, not a graphics designer. So if anyone would like to make some designs, please let me know in the comments below. 🧡 An example:

I am thinking we need at least 3 design choices:

- The Logo itself (Sticker/Phone/Magnet)

- Logo with Reddthat text

- Logo with "Where You've Truly 'ReddThat'!" - something cool that you would want on a hoody. <- Very important!

I've managed to find some on-demand printing services which will allow you to purchase directly through them and have it shipped directly from a local-ish printing facility.

The goal is to reduce the time it takes you to get your merch, reduce the costs of shipping, and hopefully ensure I know as little about you as possible. If all goes to plan, I won't know your addresses / payments / etc. and everyone can have some cool merch!

New Admins

To help with the spam issue, the increase in reports, and the new approval system, I am looking for some new administrators to join the team.

While I do not expect you to do everything I do, an hour or two a day in your respective timezone is probably the minimum. That amounts to randomly opening Reddthat a couple of times a day and checking in to confirm everything is still hunky-dory.

If you would like to apply to be an admin and help us all out, please PM me with your application, answering the following questions:

Reason for your application:

Timezone:

Time you could allocate a day (estimate):

What you think you could bring to the team:

I am thinking we'll need about 6 admins to have full coverage around the globe and ensure that no one is burnt out after a week! So even if you see a new admin pop up in the sidebar, don't worry, there is always room for more!

Parting words

Thank you to those who have reached out and offered help. Thank you to the other Lemmy admins who helped me, and thank you everyone on Reddthat for still being here and enjoying our time together.

Cheers,

Tiff

tldr

- We have now defederated from our first instance :(

- Signups are now via an application process

- We had 4 new people join our recurring donations last month! 💛

- Merch is in the works. If you have some design skills please submit your designs in the comments!

- Admin recruitment drive

Update: 2023/09/02:

This has now been completed. Our host took extra time to migrate everything, which caused a longer downtime than expected (it was an offline migration rather than a live migration).

If you were around during that time, you would have seen the status page & the incidents: https://status.reddthat.com/incident/254294

Cheers

Our hardware provider had an issue with our underlying host which caused the server to be powered off.

Pictured: Our hardware host becoming aware of the threat.

Unfortunately our server was not automatically powered on afterwards, or I jumped into the control portal too fast for the automatic start to work.

After starting our server we were back up and running.

Total downtime was ~5 minutes.

Next Steps

After a brief chat with our host, they have agreed to live migrate our VPS to another host over the coming week. This may cause 10-30 seconds of downtime, if you even notice it.

Status Page

For all status-related information, don't forget we have a status page over here: https://status.reddthat.com. If we ever have an outage longer than today's, that is where I will keep you all informed.

Thanks all!

Tiff

P.S. Video uploads work if they are small enough, both in length and size. Any video above 10 seconds won't work. This 8 second video is about the maximum our server can sustain currently.

Apparently, what I do while I'm meant to be sleeping is break Reddthat! Sorry folks. Here's a postmortem on the issue.

What happened

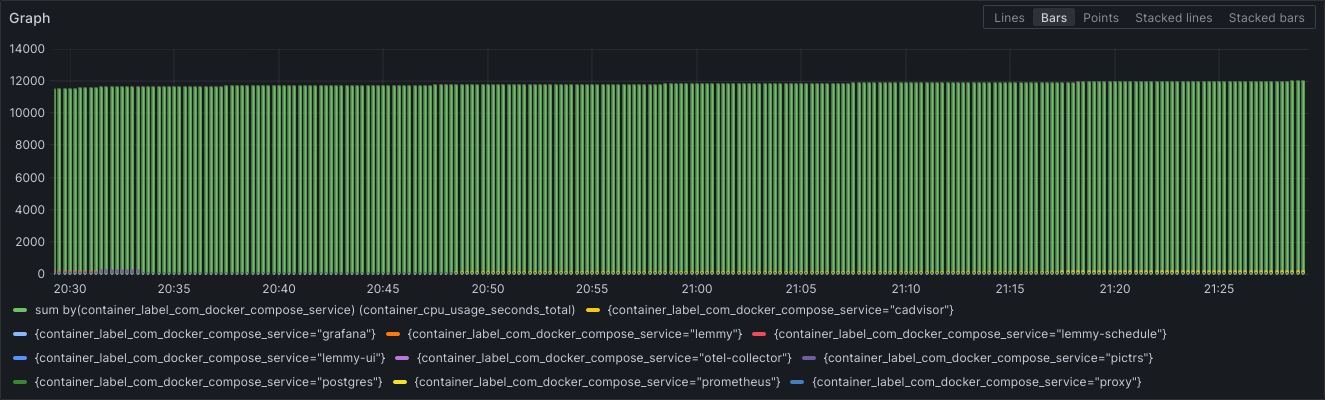

I wanted a way to view the metrics of our Lemmy instance. This is a feature called Prometheus metrics that you need to turn on. This isn't a new thing for me, and I tackle these issues at $job daily.

After reading the Prometheus documentation here, it looks like it is a compile-time flag, meaning the pre-built packages do not contain the metrics endpoints.

No worries, I thought: I was already building the Lemmy application and container successfully as part of getting our video support working.

So I built a new container with the correct build flags, turned on my dev server again, deployed, and tested extensively.

We now have interesting metrics for easy diagnosis. I tested posting comments and uploading images, as well as the new video upload!

So we've done our best, and we deploy to prod.

ohno.webm

As you know from the other side... it didn't go too well.

After 2 minutes of huge latencies, my phone lights up with a billion notifications, so I know something isn't working... Initial indications showed super high CPU usage in the Lemmy containers (the ones I newly created!). That was the first minor outage / high latencies, around 6:30-7:00 UTC, and we "fixed" it by rolling back the version and confirming everything was back to normal. I went and had a bite to eat.

not_again.mp4

Foolhardily, I attempted it again: clearing out the build cache, building directly on the server, more testing, more builds, more testing, and more testing.

I opened up 50 terminals and basically DoS'd (my) dev server with GET and POST requests, in an attempt to generate enough load to reproduce the issue and figure out where I had gone wrong in the first place.

Nothing would trigger the issue, so I continued along with my validation and "confirmed everything was working".
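For anyone wanting to run this kind of test without 50 terminals, here is a minimal Python sketch of the same idea: a thread pool firing concurrent GETs at a dev server and reporting latency percentiles. The function names are hypothetical, and the target URL in the usage note (a dev host on Lemmy's default port 8536 and the /api/v3/post/list endpoint) is an assumption; point it at your own dev instance.

```python
import math
import time
from concurrent.futures import ThreadPoolExecutor
from urllib.request import urlopen


def timed_get(url: str, timeout: float = 10.0) -> float:
    """Perform one GET request and return how long it took, in seconds."""
    start = time.perf_counter()
    with urlopen(url, timeout=timeout) as response:
        response.read()
    return time.perf_counter() - start


def hammer(url: str, workers: int = 50, requests_per_worker: int = 20,
           fetch=timed_get) -> list[float]:
    """Send workers * requests_per_worker GETs from a pool of `workers` threads
    (roughly "50 terminals") and return the latencies, sorted ascending.
    Errors from fetch() propagate; a real tool would count them instead."""
    jobs = [url] * (workers * requests_per_worker)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return sorted(pool.map(fetch, jobs))


def percentile(sorted_latencies: list[float], pct: float) -> float:
    """Nearest-rank percentile of an already-sorted, non-empty list."""
    if not sorted_latencies:
        raise ValueError("no samples")
    rank = max(1, math.ceil(pct * len(sorted_latencies) / 100))
    return sorted_latencies[rank - 1]
```

Usage against a hypothetical dev host: `latencies = hammer("http://dev.example:8536/api/v3/post/list")`, then compare `percentile(latencies, 50)` with `percentile(latencies, 99)`. Uniform synthetic GETs like these may never reproduce what mixed production traffic does, which is exactly what happened here.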

Final Issue

So we are deploying to production again, but we know it might not go so well, so we are doing everything we can to minimise the issues.

At this point we've completely ditched our video upload patch and gone with a completely blank 0.18.4 with the metrics build flag to minimise the possible issues.

NOPE

At this point I accept the downtime and attempt to work on a solution while on fire. (I would have added the everything-is-fine meme but we've already got a few here)

Things we know:

- My lemmy app build is not actioning requests fast enough

- The error relates to a timeout happening inside Lemmy, which I assumed was on its connections to Postgres, because there were ~15 Postgres processes in the middle of performing "Authentication" (this should be INSTANT)

- postgres logs show an error with concurrency.

- but this error doesn't happen with the dev's packaged app

- postgres isn't picking up the changes to the custom configuration

- was this always the case?!?!?!? are all the Lemmy admins stupid? Or are we all about to have a bad time

- The Docker image postgres:15-alpine now points to a new SHA! So a new release happened ~9 days ago, and it was auto-updated.

So, I fsck'd with Postgres for a bit and attempted to get it to use our custom PostgreSQL configuration. This was the cause of the complete ~5 minute downtime: I took down everything except Postgres to perform a backup, because at this point I wasn't too certain what was causing the issue.

You can never have too many backups! If you don't agree, let's have a civil discussion over espressos in the comments.

I brought Postgres back up with max_connections set to what it should have been (it was at the default of 100?), and prayed to a deity that would alienate the least amount of people from Reddthat :P

To no avail. Even with our PostgreSQL tuning, the Lemmy container I built was not performing under load as well as the developers' container.

So I pretty much wasted 3 hours of my life, and reverted everything back.
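On the max_connections point: each Lemmy backend keeps its own database connection pool, so the number of connections Postgres must accept grows with every backend you run. A back-of-the-envelope check in Python; the pool size of 60 and the headroom of 10 are hypothetical numbers, not Reddthat's or Lemmy's actual settings.

```python
def connections_needed(backends: int, pool_size: int, headroom: int = 10) -> int:
    """Worst case: every backend fills its pool, plus headroom for admin
    sessions, backups and other services (headroom is a guess)."""
    return backends * pool_size + headroom


def fits(max_connections: int, backends: int, pool_size: int,
         headroom: int = 10, superuser_reserved: int = 3) -> bool:
    """True if ordinary (non-superuser) clients stay within the server limit.

    PostgreSQL holds superuser_reserved_connections (default 3) of its
    max_connections (default 100) back for superusers.
    """
    usable = max_connections - superuser_reserved
    return connections_needed(backends, pool_size, headroom) <= usable


# Hypothetical: two Lemmy backends with a 60-connection pool each.
print(connections_needed(2, 60))   # 130
print(fits(100, 2, 60))            # False: the default limit is too low
print(fits(200, 2, 60))            # True
```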

Results

- All Lemmy builds are not equal. I don't know what the devs are doing differently, but when you follow the docs, one would hope it works. I'm probably missing something...

- Postgres released a new 15-alpine Docker image 9 days ago. I'm pretty sure no one noticed, but did it break our custom configuration? Or was our custom configuration always busted? (With reference to the lemmy-ansible repo here.)

- I need a way to replay logs from our production server against our dev environment to generate the correct amount of load to catch these edge cases. (Anyone know of anything?)
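On replaying production logs: dedicated load-testing tools exist, but the core idea fits in a short Python sketch. It assumes nginx's default "combined" access-log format, replays only GET requests (access logs don't record POST bodies), and keeps the original spacing between requests, optionally sped up. The send callable and the helper names are hypothetical placeholders for whatever actually issues requests against the dev server. Logged URLs may include auth= tokens, so scrub them before copying logs off production.

```python
import re
import time
from datetime import datetime

# Assumption: nginx's default "combined" access-log format, e.g.
# 1.2.3.4 - - [01/Sep/2023:06:30:00 +0000] "GET /api/v3/site HTTP/1.1" 200 99 "-" "ua"
REQUEST = re.compile(
    r'\[(?P<ts>[^\]]+)\] "(?P<method>[A-Z]+) (?P<path>\S+) HTTP/[\d.]+"'
)


def parse(lines):
    """Yield (timestamp, method, path) for every line that matches the format."""
    for line in lines:
        match = REQUEST.search(line)
        if match:
            ts = datetime.strptime(match["ts"], "%d/%b/%Y:%H:%M:%S %z")
            yield ts, match["method"], match["path"]


def replay(lines, send, speed: float = 1.0, sleep=time.sleep) -> list[str]:
    """Re-issue logged GETs through send(path), preserving their relative spacing.

    speed > 1 compresses time (2.0 replays an hour of traffic in 30 minutes).
    send() should hand work off (e.g. to a thread pool) so slow responses
    don't throttle the replay rate.
    """
    sent, previous = [], None
    for ts, method, path in parse(lines):
        if method != "GET":  # no request bodies in access logs
            continue
        if previous is not None:
            gap = (ts - previous).total_seconds() / speed
            if gap > 0:
                sleep(gap)
        previous = ts
        send(path)
        sent.append(path)
    return sent
```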

At this point I'm unsure how to build Lemmy containers that perform the same as the developers' ones, so instead of being one of the first instances able to upload video content, we might have to settle for video support when it officially arrives. I've been chatting with the pict-rs dev, and I think we are on the same page relating to a path forward. There should be some easy wins coming in the next versions.

Final Finally

I'll be choosing stability from now on.

Tiff

Notes for other lemmy admins / me for testing later: postgres:15-alpine:

2 months ago sha: sha256:696ffaadb338660eea62083440b46d40914b387b29fb1cb6661e060c8f576636

9 days ago sha: sha256:ab8fb914369ebea003697b7d81546c612f3e9026ac78502db79fcfee40a6d049
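Pinning the image by digest instead of by tag would stop a re-pushed postgres:15-alpine from arriving unannounced. A minimal Python sketch using the two digests above; Docker accepts references of the form name:tag@sha256:..., and `docker images --digests` shows what a local tag currently resolves to. The helper names are hypothetical.

```python
import re

DIGEST = re.compile(r"sha256:[0-9a-f]{64}")


def pinned(image: str, digest: str) -> str:
    """Return an image reference pinned to a content digest.

    With name:tag@sha256:<hex>, the digest is what gets pulled, so the image
    can't silently change when the tag is re-pushed upstream.
    """
    if not DIGEST.fullmatch(digest):
        raise ValueError(f"not a sha256 digest: {digest!r}")
    return f"{image}@{digest}"


def tag_moved(recorded: str, current: str) -> bool:
    """True if the tag now resolves to a different digest than the one we recorded."""
    return recorded != current


OLD = "sha256:696ffaadb338660eea62083440b46d40914b387b29fb1cb6661e060c8f576636"
NEW = "sha256:ab8fb914369ebea003697b7d81546c612f3e9026ac78502db79fcfee40a6d049"
print(pinned("postgres:15-alpine", OLD))
print(tag_moved(OLD, NEW))  # True
```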

New UI Alternatives

After looking at our UI for a while, I thought someone must have created something special for Lemmy already. So I opened our development server, told it to get the ball rolling, and investigated all the apps people have created.

Have a play around, all should be up and working. If there are other apps, ideas, or ways you think reddthat could be better please let me know!

Enjoy 😃

P.S. External UIs are a security issue

I would like to let everyone know that if you are using an external non-reddthat hosted UI (such as wefwef.app for example) you have given them access to full use of your account.

This happens because Lemmy checks for new notifications by performing GET requests via the API with the authentication cookie in the URL's query string, e.g. https://instance/api/v3/user/unread_count?auth=your-authentication-cookie-here. This URL shows up in the logs of the third-party user interface, so if the third party were nefarious, they could look at their logs and get your cookie. Then they could log in to your account or perform any request as you.

So please only use the Reddthat user-interfaces as listed here & the main sidebar.

(If you are worried, you can log out of the third-party website, which will invalidate your cookie.)
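To see why this is dangerous, note that anything which logs the full request URL stores the token verbatim. Below is a minimal Python sketch of how an operator could scrub it before logging. It is a hypothetical helper, not something Lemmy or any third-party UI is known to do, and as a user you can't rely on anyone running it. Keeping tokens out of URLs altogether (e.g. in a request header) avoids the problem.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Query parameters that carry credentials (only "auth" is taken from the post).
SENSITIVE_PARAMS = {"auth"}


def redact(url: str) -> str:
    """Replace credential-bearing query parameters before a URL is logged."""
    parts = urlsplit(url)
    query = [
        (key, "REDACTED" if key in SENSITIVE_PARAMS else value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
    ]
    return urlunsplit(parts._replace(query=urlencode(query)))


print(redact("https://instance/api/v3/user/unread_count?auth=your-authentication-cookie-here"))
# https://instance/api/v3/user/unread_count?auth=REDACTED
```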

Tiff

https://old.reddthat.com

https://alexandrite.reddthat.com

https://photon.reddthat.com

https://voyager.reddthat.com

PS. I really like alexandrite.

Hey Reddthat,

We now have nearly 700 active accounts! I can't believe how amazing that is to me, and a whopping 24 people choosing to put their wallets on the line and donate to the Reddthat cause.

If you feel like contributing monetarily, you can do so over on our Reddthat Open Collective here

Maintenance

We have now gotten to the point where our little server is no longer able to support us as well as it should. On July 28th I will turn off Reddthat, take a backup, and migrate us to the new server.

This new server has double the specs of our little go-getter and costs a whopping AUD$60 per month.

This will allow me to use the old (current) server as a development server to test all future updates, and the new server will allow us to keep our post times low and inject memes into our eyeballs as fast as possible.

Thanks

Thank you all for choosing Reddthat as your federation home, and a special thank you to those who have created communities on Reddthat as well.

Tiff

Maintenance Complete!

We have now successfully migrated to our new server!

Everything went smoothly and no issues were encountered.

The longest delay was restoring the database: I forgot to pass --jobs=4, so it was only restoring with one job instead of several in parallel.
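For reference, pg_restore can only use parallel jobs when the dump is in pg_dump's custom or directory format. A small Python sketch that builds both commands; the helper names, the database name lemmy, and the file name lemmy.dump are assumptions for illustration.

```python
import shlex


def dump_command(dbname: str, out_file: str) -> list[str]:
    """pg_dump in custom format, which pg_restore can restore in parallel."""
    return ["pg_dump", "--format=custom", f"--file={out_file}", dbname]


def restore_command(dump_file: str, dbname: str, jobs: int = 4) -> list[str]:
    """pg_restore with several parallel jobs.

    --jobs is only supported for custom or directory format archives;
    without it, pg_restore works through everything in a single session.
    """
    return ["pg_restore", f"--jobs={jobs}", f"--dbname={dbname}", dump_file]


print(shlex.join(dump_command("lemmy", "lemmy.dump")))
# pg_dump --format=custom --file=lemmy.dump lemmy
print(shlex.join(restore_command("lemmy.dump", "lemmy")))
# pg_restore --jobs=4 --dbname=lemmy lemmy.dump
```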

o/

Unfortunately we had an issue updating to the latest version of Lemmy. This caused database corruption and we had to roll back to a backup that was unfortunately from 2 days ago!

An abundance of caution, misplaced as it turned out, made me want to push this update out quickly to help with the exploit relating to custom emoji. As other instances were attacked, I didn't want Reddthat to fall to the same issue.

I am so sorry!

I have since updated our deployment scripts to automatically take a backup before all updates, and I am investigating why our cron backups didn't work as we expected them to.

We also lost all new accounts that were created over the past 2 days, all new posts, and all subscriptions. I'm very sorry about that, and I hope that you will re-register with those account details and repost the memes and pictures that make our server great.

The good news is that we have updated to the latest version of Lemmy and everything is back to "normal".
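A "backup before every update" step can be as simple as the Python sketch below: take a pg_dump first and only run the update if the dump succeeded, plus a check on the age of the newest backup so that a silently failing cron job gets noticed. The helper names, paths, database name, and update command are hypothetical, not Reddthat's actual deployment scripts.

```python
import subprocess
import time
from datetime import datetime, timezone
from pathlib import Path


def backup_then_update(update_cmd: list[str], dbname: str = "lemmy",
                       backup_dir: str = "/backups", run=subprocess.run) -> str:
    """Dump the database, and only run the update if the dump succeeded.

    check=True makes run() raise CalledProcessError on a non-zero exit, so a
    failed pg_dump aborts the deploy before anything is changed.
    """
    stamp = datetime.now(timezone.utc).strftime("%Y%m%dT%H%M%SZ")
    dump = f"{backup_dir}/{dbname}-{stamp}.dump"
    run(["pg_dump", "--format=custom", f"--file={dump}", dbname], check=True)
    run(update_cmd, check=True)
    return dump


def newest_backup_age_hours(backup_dir: str, pattern: str = "*.dump") -> float:
    """Age of the most recent backup file; infinity if there are none."""
    files = list(Path(backup_dir).glob(pattern))
    if not files:
        return float("inf")
    newest = max(f.stat().st_mtime for f in files)
    return (time.time() - newest) / 3600
```

A monitoring job could alert whenever `newest_backup_age_hours("/backups")` exceeds, say, 25 hours for a daily cron.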

Fixing Deployments

I'm going to use some of our money for a dedicated development server as our current deployment testing is clearly lacking.

Unfortunately that will have to wait until the weekend when I have some extra time to put into developing these new processes.

Tiff

Finally, a user interface that fills your whole browser! This update also brings other bug fixes and federation fixes.

They also believe they might have fixed the "Hot" issues, where you randomly get super old posts in your feeds for no reason.

Yes, we were also down for ~10 minutes while I performed a backup and updated the servers.

Our new server has been set up, and the database migration should happen this week.

Cheers, Tiff

Hello Reddthatians, Reddthians, reddies? 🤷 Everyone!

If you were lucky enough to be browsing the site at around 10am UTC you might have seen a down message!

Fear not, we are now back and faster than ever. We are speed.

Images

Using Backblaze was an unfortunate mistake but one that allowed us to migrate to another object store without any issues!

We are now using Wasabi Object Storage for USD$7/month (which is slightly higher than the previously budgeted USD$60/year, but the benefits outweigh the costs). Instead of the 180ms responses from Australia to eastern USA, we now have 0.5ms responses from Wasabi!

"You've lost me Tiff, what does this all mean?"

Well my good friend, it means picture uploads and responses are so fast you should be seeing next to 0 of those pesky red JSON popups while uploading pictures. ^[0]^

Scaling Out

We have successfully scaled out our frontend and backend applications, Lemmy-ui & Lemmy respectively. We are now running 2 independent instances of each to process traffic, which allows Lemmy to process requests concurrently. It also lets us reduce downtime when pushing updates even further, as we can now bring one instance down, perform the update, bring it back up, and then do the same for the other.
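The update procedure described above is a rolling update. A minimal Python sketch of its control flow; the drain/update/healthy/restore callables and the replica names are hypothetical stand-ins for whatever the real deployment tooling (load balancer, container runtime) does.

```python
def rolling_update(instances, drain, update, healthy, restore):
    """Update replicas one at a time so the others keep serving traffic.

    Each replica is taken out of rotation, updated, health-checked, and put
    back before the next one is touched. If a check fails, the rollout stops
    and the remaining replicas are left running the old version.
    """
    for name in instances:
        drain(name)
        update(name)
        if not healthy(name):
            raise RuntimeError(f"{name} failed its health check; halting rollout")
        restore(name)


events = []
rolling_update(
    ["lemmy-1", "lemmy-2"],
    drain=lambda n: events.append(("drain", n)),
    update=lambda n: events.append(("update", n)),
    healthy=lambda n: True,
    restore=lambda n: events.append(("restore", n)),
)
print(events)
```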

Now that we have successfully tested scaling on 1 server it is time to purchase more. Which leads me into the database.

Database performance

Currently, our database performance is sub-optimal, and it is the reason some of the issues with viewing content arise. Lemmy has scheduled tasks which pre-populate what is "Hot", and these have to run over all the posts & comments it knows about. I've been seeing deadlocks, where queries start "waiting" for other queries to finish. This is especially unfortunate as it results in maxing out our underlying storage. Unfortunately, our little server cannot run all 3 at the same time.

Thus the need for scaling out.
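Besides scaling out, one common way to ease this kind of contention is to recompute ranks in small batches, committing between them, rather than in one huge UPDATE that holds its locks for the whole run. The Python sketch below uses SQLite so it runs anywhere; the table layout and the time-decay formula (log-damped score over age to the power 1.8) are illustrative assumptions, not Lemmy's actual schema or query, and this is not what Lemmy itself did at the time.

```python
import math
import sqlite3
from datetime import datetime


def hot_rank(score: int, published: datetime, now: datetime) -> float:
    """Illustrative time-decayed rank; the constants are assumptions."""
    hours = (now - published).total_seconds() / 3600
    return 10000 * math.log10(max(1, score + 3)) / (hours + 2) ** 1.8


def refresh_hot_ranks(conn: sqlite3.Connection, now: datetime, batch: int = 1000) -> int:
    """Recompute ranks batch by batch (keyset pagination on id), committing
    after each batch so no single transaction holds locks for long."""
    updated, last_id = 0, 0
    while True:
        rows = conn.execute(
            "SELECT id, score, published FROM post WHERE id > ? ORDER BY id LIMIT ?",
            (last_id, batch),
        ).fetchall()
        if not rows:
            return updated
        conn.executemany(
            "UPDATE post SET hot_rank = ? WHERE id = ?",
            [(hot_rank(s, datetime.fromisoformat(p), now), i) for i, s, p in rows],
        )
        conn.commit()  # end this batch's transaction before starting the next
        updated += len(rows)
        last_id = rows[-1][0]
```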

Purchase plan and Endpoint Migration

Option 1:

Purchase this beefy, completely dedicated server so we can run our database, image service, and multiple backend & frontend services. This would be in addition to running more backends & frontends on our current server. It would give us a tremendous amount of processing power, and could possibly allow for video uploads as well! ^[1]^

- $120.00AUD Monthly

- E3-1230v6 (4x 3.5 GHz Core)

- 32GB RAM

- 2x 480GB SSD

- 10TB data

(If we wanted an NVMe option, it would be $170AUD/month)

Option 2:

Purchase another VPS with our current provider and dedicate that instance purely to database-related items. This would free up all the compute power on our current server to run our apps.

- $44.00AUD Monthly

- 4 Core CPU

- 16GB RAM

- 160 GB NVMe

- 8TB data

I think either option is valid; it comes down to pricing. Our wonderful donators have currently provided us with... (checks Open Collective)... $557.28 AUD to work with, which is an amazing feat! On top of that, we have $27 in monthly donations.

Monthly Costs:

- Object storage: $7.00USD/m (About $10.50AUD)

- RAM upgrade: $4.50AUD/m

Total Monthly Costs: $15.00AUD

So, a quick back-of-the-envelope calculation gives us:

- Option 1: ~5 months before we have problems

- Option 2: ~17 months before we have problems
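These runway figures can be reproduced in a few lines of Python, assuming donations stay at $27/month, the object storage bill stays at roughly $10.50AUD, and the balance is only spent on these costs. The variable and function names are illustrative.

```python
BALANCE_AUD = 557.28                 # Open Collective balance quoted above
MONTHLY_DONATIONS_AUD = 27.00
EXISTING_COSTS_AUD = 10.50 + 4.50    # object storage (~USD$7) + RAM upgrade

OPTIONS_AUD = {"Option 1 (dedicated)": 120.00, "Option 2 (VPS)": 44.00}


def runway_months(balance: float, income: float, costs: float) -> float:
    """Months until the balance runs out; infinite if income covers costs."""
    burn = costs - income
    return float("inf") if burn <= 0 else balance / burn


for name, server in OPTIONS_AUD.items():
    months = runway_months(BALANCE_AUD, MONTHLY_DONATIONS_AUD, EXISTING_COSTS_AUD + server)
    print(f"{name}: {months:.1f} months")
# Option 1 (dedicated): 5.2 months
# Option 2 (VPS): 17.4 months
```

Option 1 burns $108AUD a month ($135 of costs against $27 of donations), Option 2 burns $32AUD, which is where the ~5 and ~17 month figures come from.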

At this time I think a dedicated server is premature and would put a strain on our budget, knowing that in 5 months we would have a budgeting issue.

Once we have more monthly donators we can revisit purchasing a dedicated server.

I'll be monitoring server performance with the new multi-frontend/backend system over the coming days, while everyone reads this post. Then probably on the 8th/9th of July (this weekend), I will purchase the new server for a 1 month trial and start setting it up, securing it and validating our proposed system.

Thank you all for being here again! I'm loving all the new communities, new display pictures & hilarious usernames. I cannot wait to see what our little community is going to become.

Cheers,

Tiff

Notes

[0] - Technically there is a 10 second hard limit between Lemmy and pict-rs, so a video needs to be uploaded and possibly converted into a viable format within 10 seconds. This is also why, if your internet is slow, you will get the error popups more frequently than others.

[1] - With the dedicated server, we could convert/process videos faster, possibly getting under that 10 second timeout. But I still do not think it would be achievable. Lemmy will need a different way of handling video uploads, where we tell users that the video is processing, just like every other video upload site.

[2] - This dedicated server is a similar size to what lemmy.world is running and they have over 7x the active users we have! Probably overkill in the end.
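Notes [0] and [1] describe two ways of handling uploads. A Python asyncio sketch of both: a hard deadline, where the work is cancelled and the user gets an error, versus starting the work in the background and returning a job id the client can poll. The function names and the job dictionary are hypothetical; this models the behaviour described, not Lemmy's or pict-rs's code.

```python
import asyncio

DEADLINE_S = 10.0  # the hard limit described in note [0]


async def upload_with_deadline(process, deadline: float = DEADLINE_S):
    """Await the upload/conversion coroutine; give up once the deadline passes.

    asyncio.wait_for cancels the work on timeout, so a slow connection or a
    long conversion simply runs out of time.
    """
    try:
        return await asyncio.wait_for(process(), timeout=deadline)
    except asyncio.TimeoutError:
        return None  # the UI would show the red error popup here


async def upload_in_background(process, jobs: dict) -> int:
    """The alternative from note [1]: start processing, return a job id at once,
    and let the client poll jobs[job_id] until it is done."""
    job_id = len(jobs)
    jobs[job_id] = asyncio.create_task(process())
    return job_id
```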

This is terribly hard to write. If you flushed your cache right now, you would see all the newest posts without images. These are now 404s, even though the images exist. In 2 hours everyone will see this. Unfortunately there is no going back: the key store for all the "new" images cannot be recovered.

What happened?

After the picture migration from our local file store to our object storage, I made a configuration change so that our Docker container no longer referenced the internal file store. This resulted in the picture service having an internal database that was completely empty and started from scratch 😔

What makes this worse is that this new database lived inside the ephemeral container, so whenever the containers were recreated, that data was lost. This happened multiple times over the 2 day period.
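A cheap safeguard against this class of mistake is to refuse to start when a data directory isn't backed by a volume. A minimal Python sketch, assuming the directory passed in is the volume's mount point itself: inside a container, a Docker volume or bind mount normally shows up as a separate mount, while a directory in the container's writable layer does not. The function name and any path you pass are hypothetical.

```python
import os


def require_persistent_dir(path: str) -> str:
    """Refuse to start if `path` is not a mount point.

    A plain directory in the container's writable layer disappears when the
    container is recreated; a mounted volume survives.
    """
    if not os.path.ismount(path):
        raise RuntimeError(f"{path} is not a mounted volume; data would be lost on recreate")
    return path
```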

What made this harder to debug was our CDN caching was hiding the issues, as we had a long cache time to reduce the load on our server.

The good news is that after you read this post, every picture will be correctly uploaded and added to the internal picture service database! 😊 The "better" news is that all original images from the 28th of June and before will start working again instantly.

Timeframe

The issue existed from the 29th of June to the 1st of July.

Resolution

Right now. 1st of July 8:48 am UTC.

From now on, everything will work as expected.

Going forward

Our picture service migration has been fraught with issues, and I cannot express how annoyed and disheartened I am by the accidents that have occurred. I have yet to provide a service that I would be happy with.

I am very sorry that this happened and I will strive to do better! I hope you all can accept this apology.

Tiff

Welcome to everyone joining up so far!

Housekeeping & Rules

Reddthat's rules are defined here (& are available from the sidebar as well). When in doubt, check the sidebar on any community!

Our rules can be summed up with 4 points, but please make sure you do read the rules.

- Remember that we are all humans

- Don’t be overtly aggressive towards anyone

- Try and share ideas, thoughts and criticisms in a constructive way

- Tag any NSFW posts as such

I'm lost and completely new to the fediverse!

That's fine, we were all there too! Our fediverse friends created a starting guide here which can be read to better understand how everything works in a general sense.

As part of the fediverse you can follow and block any community you want to as well as follow and interact with any person on any other instance. That means even if you did not choose Reddthat as your home server, you still could have interacted with us regardless!

So I'd like to say thanks for picking our server to come and experience the fediverse on.

Reddthat "Official" Communities:

- Announcements: !reddthat@reddthat.com (Also where you are reading this now!)

- Support: !reddthatsupport@reddthat.com

- Community: !community@reddthat.com (for any & all topics)

Other Communities

Communities can be viewed by clicking the Communities section at the top (or this link). You will be greeted by 3 options. Subscription, Local, All.

They are pretty self explanatory, but for a quick definition:

- Subscription: Communities that you personally are subscribed to (can exist anywhere on the fediverse, on any instance)

- Local: Communities that were created on Reddthat

- All: Every community that Reddthat knows about

"All" is not every community on the whole fediverse, only the ones we know about, also known as the ones we have "federated" against.

There is the fediverse browser which attempts to list every community on the fediverse.

Just because a community exists elsewhere, doesn't mean that you have to join it. You can create your own! We welcome you to create any community on Reddthat that your heart desires.

Funding & Future

How we pay for all the services is explained in our main funding and donation post. We are 100% community funded, and completely open about our bills and expenses.

The post may need some updating over the course of the next day or two as we deal with an increased user base.

If you enjoy it here and want to help us keep the lights on and improve our services, please help us out. Any amount of dollarydoos is welcome!

Good luck on your adventures and welcome to Reddthat.

Cheers,

Tiff

These were the signup stats for the Reddit Exodus:

Our signups have gone 🚀 through the roof! From 1-2 per hour to touching on 90! Tell your friends about reddthat, and let's break 100!

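| Hour | Signups |
|---|---|
| 18:00 | 2 |
| 19:00 | 18 |
| 20:00 | 7 |
| 21:00 | 20 |
| 22:00 | 27 |
| 23:00 | 76 |
| 00:00 | 90 |
| 01:00 | 84 |
| 02:00 | 62 |
| 03:00 | 47 |
| 04:00 | 32 |
| 05:00 | 28 |
| 06:00 | 44 |
| 07:00 | 43 |
| 08:00 | 43 |
| 09:00 | 38 |
| 10:00 | 5 |
| 11:00 | 42 |
| 12:00 | 53 |
| 13:00 | 49 |
| 14:00 | 48 |
| 15:00 | 50 |
| 16:00 | 16 |
| 17:00 | 24 |
| 18:00 | 62 |
| 19:00 | 40 |
| 20:00 | 41 |
| 21:00 | 20 |
| 22:00 | 16 |
| 23:00 | 18 |

Total: 1145!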

So, for those of you who were refreshing the page and looking at our wonderful maintenance page: it took way longer than we planned! I'll do a full write-up after I've dealt with a couple of timeout issues.

Here is a bonus meme.

So? How'd it go...

Exactly how we wanted it to go... except with a HUGE timeframe.

As part of the initial testing with object storage I tested using a backup of our files. I validated that the files were synced, and that our image service could retrieve them while on the object store.

What I did not account for was the latency to Backblaze from Australia, how our image service handled migrations, and the response times from Backblaze.

- au-east -> us-west is about 150 to 160ms.

- the image service was single-threaded

- response times for adding files are around 700ms to 1500ms (inclusive of latency)

We had 43000 files totaling ~15GB of data relating to images. Response times were up to 1.5 seconds per image, and we were only operating on one image at a time, so even at an average of 1s per image the best case scenario is 43000 seconds, or just under 12 hours of transfer time.
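The estimate above is simple serial arithmetic: with a single-threaded service, total time is the number of files multiplied by the time per request. The Python sketch below reproduces it for the observed range of response times. The `workers` parameter is a hypothetical addition to show why a multi-threaded option matters; it assumes parallel requests would not slow each other down, which real uploads may not satisfy.

```python
def transfer_hours(files: int, seconds_per_file: float, workers: int = 1) -> float:
    """Rough wall-clock estimate, in hours, for moving `files` items.

    Assumes every request takes `seconds_per_file` and that `workers`
    requests run in parallel without slowing each other down
    (workers=1 models a single-threaded image service).
    """
    return files * seconds_per_file / workers / 3600


FILES = 43_000  # number of image files mentioned in the post

for per_file in (0.7, 1.0, 1.5):  # range of observed response times
    print(f"{per_file:.1f}s per file -> {transfer_hours(FILES, per_file):.1f} h")

# Hypothetical: 8 parallel workers at 1s per file (not an option we had).
print(f"8 workers at 1.0s -> {transfer_hours(FILES, 1.0, workers=8):.1f} h")
```

For comparison, the roughly 19 hours the migration actually took works out to about 1.6s per file, slightly above the worst-case response time.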

The total migration took around 19 hours as seen by our pretty transfer graph:

So, not good, but we are okay now?

That was the final migration we will need to do for the foreseeable future. We have enough storage to last over 1 year of current database growth, with the option to purchase more storage on a yearly basis.

I would really like to purchase a dedicated server before that happens, and if we continue having more and more amazing people join our monthly donations on our Reddthat Open Collective, I believe that can happen.

Closing thoughts

I would like to take this opportunity to apologise for miscalculating the downtime, as well as for not fully understanding the operational requirements of our usage of object storage.

I may have also been quite vocal on the Lemmy Admin matrix channel regarding the lack of a multi-threaded option for our image service. I hope my sleep deprived ramblings were coherent enough to not rub anyone the wrong way.

A big final thank you to everyone who is still here, posting, commenting and enjoying our little community. Seeing our community thrive gives me great hope for our future.

As always. Cheers,

Tiff

PS.

The bot defence from our last post unfortunately did not work as we hoped, and it didn't protect us from a bot wave. So I've turned registration applications back on for the moment.

PPS. I see the people on reddit talking about Reddthat. You rockstars!

Edit:

Instability and occasional timeouts

There seems to be a memory leak in Lemmy v0.18 and v0.18.1, which some other admins have reported as well, and it has been plaguing us since. Our server would be running completely fine, and then BAM, we'd be using more memory than was available and Lemmy would restart. These restarts lasted about 5-15 seconds; if you hit one, you would have seen super long page loads, or your mobile client saying "network error".

Temporary Solution: Buy more RAM.

We now have double the amount of memory courtesy of our open collective contributors, and our friendly VPS host.

In the time I have been making this edit I have already seen it survive a memory spike, without crashing. So I'd count that as a win!

Picture Issues

This leaves us with the picture issues. It seems the picture migration had an error: a few of the pictures either never made it across, or the internal database was corrupted! Unfortunately there is no going back, and those images... were lost or are in limbo.

If you see something like the image below, make sure you let the community/user know:

Also if you have uploaded a profile picture or background you can check to make sure it is still there!

<3 Tiff

Hello Reddthat! It is I, the person at the top of your feed.

First off. Welcome all new users! Thank you for signing up and joining our Reddthat community.

Bot Defence

Starting yesterday, after going through 90+ registration applications, I couldn't do it anymore. I felt compelled to give people a great experience by letting them in instantly, and kept my phone on me for over 24 hours, checking every notification to see if it was another registration application I needed to quickly accept.

I want to quickly say thank you to the people who obviously read all the information and for those that didn't I'm keeping a close eye on you... 😛

I found a better solution to our signup problems.

As we use Cloudflare for our CDN, I have turned on their security system for the signup page. ~~Now when anyone goes to the signup page, they will be given a challenge that needs to be solved.

That means any bots that cannot pass Cloudflare's automated challenge cannot sign up.

A win until we get our captcha working again.~~

Well, I did not check the signup process correctly. It doesn't act as I thought it would, so I'll disable it after the migration.

Downtime / Migration to object storage

Today in the fediverse we have successfully confirmed that object storage will be an acceptable path forward, but it will not operate as initially hoped.

I initially hoped to offload everything via our CDN, but the data still needs to go through our app server. The silver lining is that we can still cache it heavily on our CDN to ensure that the pictures will be served as fast as possible for you.

So it may be slightly pricier than we initially planned for when moving to object storage, but in the end we still benefit, functionally and monetarily. The reason is that we originally expected not to be billed for egress (fetching/displaying images), whereas now we will be. The fees are very low and should still be covered by our wonderful monthly donators.

We will have about 15-20GB of storage that needs to be moved, and unfortunately our image service cannot run while the migration is in progress, which means we need to turn it off while the migration happens. To top it all off, we have... 43000+ (and counting) small image files. If you haven't worked with large swarms of small images before, the one thing I can tell you is that transferring small images sucks.

So we can do two things:

1. Turn off everything

- Dedicate all CPU and bandwidth to the migration

- Ensuring continuity and reducing the risk of something going wrong

2. Turn off the picture service

We can run Reddthat without the picture service & uploads while we perform the migration, but the migration will have an impact on server performance.

- Any picture we host (that isn't cached) will return a 404.

- Any uploads will timeout during that period, and return an error popup.

- Pages will be slightly slower to respond.

- Something else might break 🤷

Because of the risks associated with running only half our services, I've decided to continue with our planned downtime and go with option 1, turning off everything while we perform the migration.

Date: 28th June (UTC)

- Start Time: 0:05

- End Time: 6:00 (Expected)

It will probably take the whole 6 hours. In our testing, it did 150 items in 10 minutes... I will put up a maintenance page and will keep you all updated during that time frame, especially if it is going to take longer, but unfortunately it will take however long it takes.

This will be the last announcement until we do the migration.

Cheers,

Tiff

PS. Like what we are doing? Become a contributor on our Open Collective to help finance these changes!

Hello Everyone!

Let's start with the good: we recently hit over 700 registered accounts! Hello! I hope you are doing well on your fediverse journey!

We have also hit...

(15 when I posted this) total individual contributors! Every time I see an email saying we have another contributor it makes me feel all warm and fuzzy inside! The simple fact that together we are making something special here really touches my soul.

If you feel like joining us and keeping the server online and filled to the brim with coffee, you can see our open collective here: Reddthat Open Collective.

0.18 (& Downtime?)

As I have said in my community post (over here!), I wanted to wait for 0.18.1 to come out so I did not have to fight off a wave of bots (0.18.0 no longer has a captcha), and I didn't want to enable registration applications because, in my opinion, that just ruins the whole on-boarding experience.

So, where does that leave us?

I say, screw those bots! We are going to use 0.18.0 and we are going to rocket our way into 0.18.1 ASAP. 🚀

and.... it's already deployed! Enjoy the new UI and the lack of annoyances on the Jerboa application!

If you are getting any weird UI artifacts, hold Control (or command) and press the refresh button, as it is a browser cache issue.

~~I'm going to keep the signup process the same but monitor it to the point of helicopter parenting. So if we get hit by bots we'll have to turn on the Registration Application which I'm really hoping we won't have to. So anyone out there... lets just be friends okay?~~

Well... it looks like we cannot be friends! Application Registration is now turned on. Sorry everyone. I guess we can't have nice things!

Weren't we going to wait?

Moving to 0.18 was actually forced by me (Tiff), as I upgraded the Lemmy app running on our production server from 0.17.4 to 0.18.0. This update caused the database migrations to run. The most recent backup I had at the time of the unplanned upgrade was from about an hour before that, so rolling the database back was not a viable option, as I didn't want to lose an hour's worth of your hard-typed comments & posts.

The mistake, or "root cause", was an environment variable that was set in my deploy scripts. I use environment variables in our deployments to ensure they can be replicated across our "dev" server (my local machine) and our "prod" server (the one you are reading this post on now!).

This has been fixed up and I'm very sorry for pushing the latest version when I said we were going to wait. I am also going to investigate how we can better achieve a rollback for the database migrations if we need to in the future.

Pictures (& Videos)

The reason I was testing our deployments was to fix our pictures service (called pictrs). As I've said before (in a non-announcement way), we are slowly using more and more space on our server to store all your fancy pictures, as well as all the pictures from the instances we federate with. If we want to ensure stability and future expansion, we need to migrate our pictures off this server and onto object storage. The latest version of pictrs now has that capability, and it also has the capability of hosting videos!

Now, before you go and start uploading videos, there are limits! We decided to limit videos to 400 frames, which is about 15 seconds of video. This was due to video file sizes being huge compared to pictures, as well as the bandwidth that comes with sharing video content. There is a reason there are not hundreds of video sharing sites.
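How long a 400-frame clip actually runs depends on its frame rate, since duration is simply frames divided by frames per second. This Python sketch shows the range for a few common frame rates; the frame rates are assumptions for illustration, and "about 15 seconds" corresponds to a clip of roughly 27 fps.

```python
def clip_seconds(frames: int, fps: float) -> float:
    """Length in seconds of a clip with `frames` frames played at `fps`."""
    return frames / fps


MAX_FRAMES = 400  # the per-video frame limit described above

for fps in (24, 30, 60):  # common frame rates, assumed for illustration
    print(f"{fps} fps -> {clip_seconds(MAX_FRAMES, fps):.1f} s")

# Frame rate at which 400 frames comes out to exactly 15 seconds.
print(f"15 s needs ~{MAX_FRAMES / 15:.1f} fps")
```

In practice, a 60 fps recording hits the limit in under 7 seconds, so lowering the frame rate before uploading buys more running time.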

Object Storage Migration

I would like to thank the 5 people who have donated on a monthly recurring basis, because without knowing there is a constant income stream, using a CDN and object storage would not be feasible.

Over the next week I will test the migration from our filesystem to a couple of object storage hosting companies and ensure they can scale up with us, with Backblaze being our first choice.

Maintenance Window

- Date: 28th of June

- Start Time: 00:05 UTC

- End Time: 02:00 UTC

- Expected Downtime: the full 2 hours!

If all goes well with our testing, I plan to perform the migration on the 28th of June around 00:05 UTC. We currently have just under 15GB of images, so I expect it to take at most 1 hour, with the actual downtime closer to 30-40 minutes, but knowing my luck it will be the whole hour.

Closing

Make sure you follow !community@reddthat.com for any extra non-official-official posts, or if you just want to talk about what you've been doing on your weekend!

Something I cannot say enough is thank you all for choosing Reddthat for your fediverse journey!

I hope you had a pleasant weekend, and here's to another great week!

Thanks all!

Tiff

After updating to the new pictrs container, all image uploads are (randomly?) failing.

~~We are looking into it.~~

https://github.com/LemmyNet/lemmy-ansible/pull/90 broke it as they updated the pictrs container from 0.3.1 to 0.4.0-rc.7

The 0.4.0-rc.7 intermittently worked for small images, and even videos! (which is the next change as part of the 0.4.x version that will be coming out.)

I've rolled back to v0.3.3 and it's back and working. See the comments for a GIF (which gets converted to an MP4), a JPG, and a PNG.

Enjoy your weekends everyone!

Hello! It seems you have made it to our donation post.

Thank you

We created Reddthat for the purpose of creating a community that can be run by the community. We did not want it to be something that is moderated, administered and funded by just 1 or 2 people.

Current Recurring Donators:

Current Total Amazing People:

Background

In one of our very first posts, titled "Welcome one and all", we talked about what our short-term and long-term goals are.

In the 7 days since starting, we have already federated with over 700 different instances, and have 24 different communities and over 250 users who have contributed over 550 comments. So I think we've definitely achieved our short-term goals, and I thought this was going to take closer to 3 months to reach these types of numbers!

We would like to first off thank everyone for being here, subscribing to our communities and calling Reddthat home!

Funding

Open Collective is a service which allows us to take donations, be completely transparent when it comes to expenses and our budget, and gives us some idea of how we are tracking month-to-month.

Servers are not free, databases are growing, images are uploading and the servers are running smoothly.

This post has been edited to only include relevant information about total funds and "future" plans. Because sometimes, we reach the future we were planning for!

Current Plans:

Database

The database service is another story. The database has grown to 1.8GB (on the file system) in 7 days. Some quick math makes that about 94GB in 1 year's time. Our current host allows us to add on 100GB of SSD storage for $100/year, which is very viable and will allow us to keep costs down while planning for our future.
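The "quick math" is a straight-line projection: take the average daily growth over the first week and multiply it out to a year. A minimal Python sketch of that calculation, assuming growth continues at the same average rate (real growth may speed up or slow down):

```python
def projected_size_gb(observed_gb: float, observed_days: float, horizon_days: float = 365) -> float:
    """Linear projection: assume the database keeps growing at its
    observed average daily rate (ignores any acceleration or slowdown)."""
    daily_rate = observed_gb / observed_days
    return daily_rate * horizon_days


# 1.8GB on disk after the first 7 days, as stated above.
one_year = projected_size_gb(1.8, 7)
print(f"~{one_year:.0f} GB after one year")  # prints: ~94 GB after one year
```

At roughly 94GB per year, the 100GB SSD add-on covers about one year of growth at this rate.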

Annual Costings:

Our current costs are:

- Domain: €15 (EUR)

- Server: $118.80 AUD

- Server RAM upgrade: $54.50 AUD

- Server: $528.00 AUD

- Wasabi object storage: $72 USD

- Total: ~$830 AUD per year (~$70/month)

That's our goal. That is the number we need to achieve in funding to keep us going for another year.
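Because the line items are in three currencies, the yearly total depends on exchange rates. This Python sketch converts everything to AUD and sums it; the two conversion rates are assumptions chosen for illustration, not figures from this post, so the result only lands close to the ~$830 estimate rather than matching it exactly.

```python
# Illustrative exchange rates only; these are assumptions, not figures from the post.
EUR_TO_AUD = 1.65
USD_TO_AUD = 1.55

# Annual line items from the list above, converted to AUD where needed.
annual_costs_aud = {
    "Domain (EUR 15)": 15 * EUR_TO_AUD,
    "Server ($118.80 AUD)": 118.80,
    "Server RAM upgrade": 54.50,
    "Server ($528.00 AUD)": 528.00,
    "Wasabi object storage (USD 72)": 72 * USD_TO_AUD,
}

total = sum(annual_costs_aud.values())
print(f"~${total:.0f} AUD per year, ~${total / 12:.0f} AUD per month")
```

Swapping in the current rates for `EUR_TO_AUD` and `USD_TO_AUD` gives an up-to-date figure.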

Cheers

Tiff

PS. Thank you to our donators! Your names will forever be remembered by me: Last updated on 2023-10-03

Recurring Gods (🌟)

- Nankeru (🌟)

- pleasestopasking (🌟)

- souperk (🌟)

- Incognito (x2 🌟🌟)

- Siliyon (🌟)

- Ed (🌟)

- ThiccBathtub (🌟)

- VerbalQuicksand (🌟)

- hexed (🌟)

- Bryan (🌟)

- Daniel (🌟)

- Patrick (🌟)

Once Off Heroes

- Guest(s) x12

- Stimmed

- MrShankles

- RIPSync

- Alexander

- muffin

- MentallyExhausted

- Dave_r

The following rules govern reddthat.com and all communities hosted on Reddthat.

Ground Rules

We are an NSFW-enabled instance, which means there may be NSFW content in the Local and All categories if you leave NSFW enabled in your account. If you do not wish to view NSFW content, you are still welcome here, but make sure you toggle off Show NSFW content under your Settings.

Another option is to select "Block Community" from the sidebar, when on the specific community. Once you have done that you will never see it again!

Our Instance Rules

- General Code of Conduct

- Any NSFW post must be tagged as NSFW. Failure to do so will result in one warning only

- Anything that you wouldn't want your boss or coworkers to see, needs to be tagged NSFW

- NSFW also acts as "Content Warning" outside of the specific NSFW communities

- Remember the human! (no harassment, threats, etc.)

- No racism or other discrimination

- No endorsement of hate speech

- No self-advertisements or spam

- No link-spamming

- Recommended 5 links per community per day unless noted in Community Rules

- No content against Australian Law

Any posts or comments that are in breach of these rules will be dealt with, and remediation will occur. Whether that be a warning, temporary ban, or permanent ban.

(TLDR) The crux of it boils down to:

- Remember that we are all humans

- Don’t be overtly aggressive towards anyone

- Try and share ideas, thoughts and criticisms in a constructive way

- Tag any NSFW posts as such

Moderation

We agree with the Code of Conduct for Moderation and will do our best to uphold it.

Lemmy provides the Moderation Log for everyone to see as well. This is a federated log showing actions taken by other moderators and other instance admins. As such, the posts that appear in the log come with a content warning.

You can see the modlog in the bottom of the sidebar. Here is the link if you wish to see it.

References

As both links above reference a domain outside of our control (join-lemmy), here are the git commit and the Wayback Machine link:

Feedback

If you take issue with these rules, or think a rule should be modified, please add a comment on this post with answers to the following as well as any extra information you can think of:

- What rule would you like to change?

- Why you would like to change it?

- What are the benefits to this rule change?

- Have there been any issues and can you reference them?

Thank you for your continued support.

- Tiff

Transparency log:

2023-08-19 - Changed Maximum Links posted in community from 2 to 5 per day.

2023-06-13 - Added "Ground Rules" section, Added Feedback section, `Instance Rules` -> `Our Instance Rules`.

2023-06-11 - Post Creation

Thank you all for letting me wake up this morning to find even more future friends have joined our little community. At last count it was only 30 users; now we have over 80!

Since the last update, we've been approved for our OpenCollective initiative, where you lovely users can help ensure that Reddthat stays online for as long as the funding allows. All of our funding goals are currently listed there, as well as our stretch goal of paying our moderation and administration team for their hard work.

We've reached another milestone: we have now federated with over 310 different instances. That means there are over 310 other servers out there that we are connecting to and sharing posts with!

Thank you all, and remember the human!

Tiff

So. You used to reddit, now you've reddthat.

Welcome!

Please feel free to explore some of our communities, and make sure you explore other instances' communities as well!

We are community funded via OpenCollective & Liberapay currently.

Short-term Goals: (3-6m)

- Gather some like minded people to have fun with and share intellectual posts, and silly memes

- Federate with as many of the great communities as we can

Long-term Goals: (6-12m)

- Make progress towards our 50% or 100% funding goal

- Create a new skin to make reddthat unique

- Integrate a "Supporter" badge for all supporters

For anyone joining up to this instance <3 For anyone federating with this instance <3 Together we can make a great community, run by the community, for our community.
