Why do I still have to work my boring job while AI gets to create art and look at boobs?

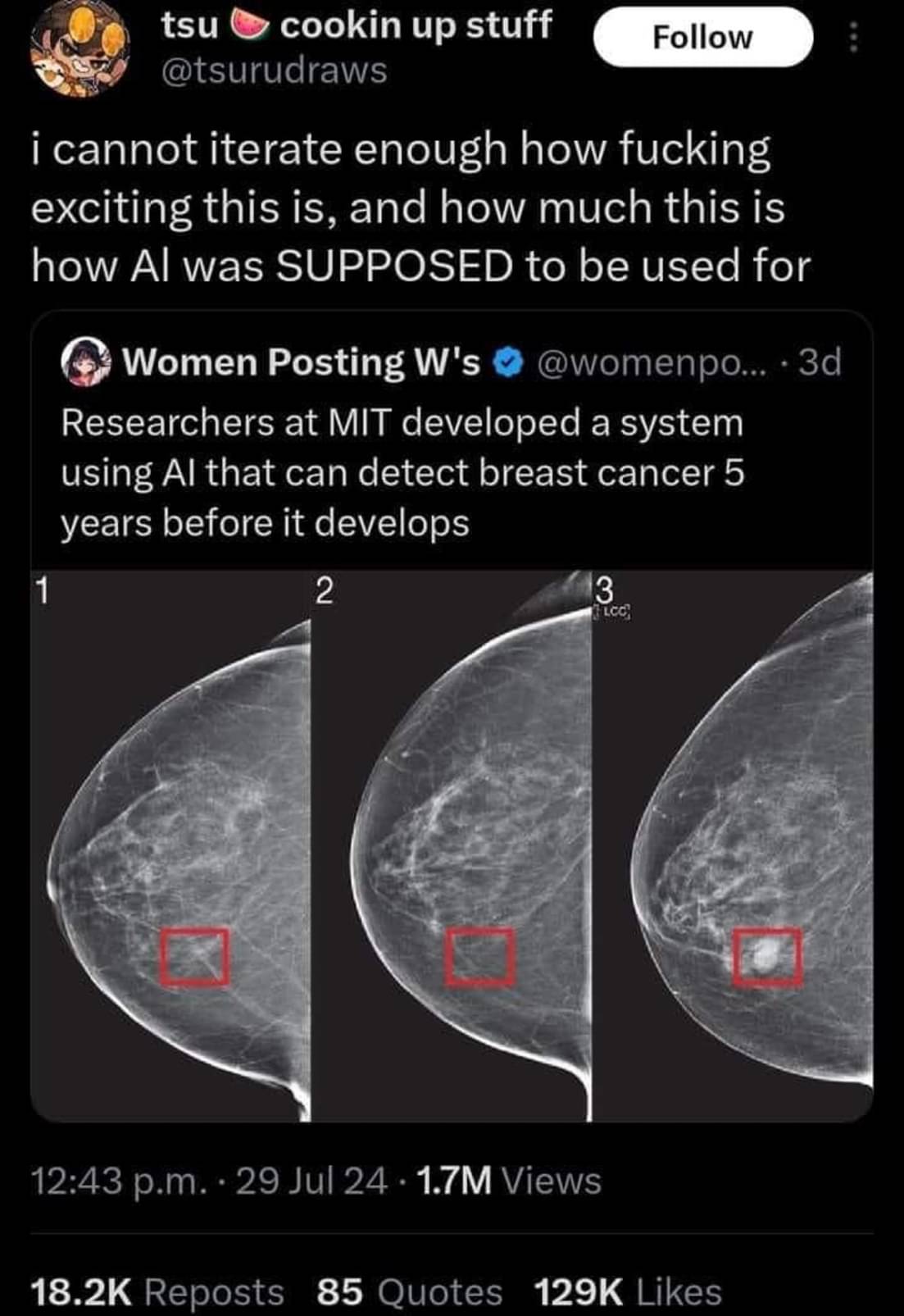

Now make mammograms not $500, not have a 6-month waiting time, and make them available for women under 40. Then this'll be a useful breakthrough.

Done.

Unfortunately, AI models like this one often never make it to the clinic. The model could be impressive enough to identify 100% of cases that will develop breast cancer. However, if it has a false positive rate of, say, 5%, its use may actually cause more harm than it prevents.
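To put rough numbers on that point, here is a minimal sketch using Bayes' rule. The 100% detection and 5% false positive rate come from the comment above; the 0.5% prevalence is an invented illustrative value, not a real statistic, and the function name is hypothetical.

```python
def positive_predictive_value(sensitivity: float,
                              false_positive_rate: float,
                              prevalence: float) -> float:
    """Probability that a flagged person really has the condition (Bayes' rule)."""
    true_positives = sensitivity * prevalence
    false_positives = false_positive_rate * (1.0 - prevalence)
    return true_positives / (true_positives + false_positives)


# Sensitivity and false positive rate are taken from the comment;
# the prevalence is an ASSUMED value chosen only for illustration.
ppv = positive_predictive_value(sensitivity=1.0,
                                false_positive_rate=0.05,
                                prevalence=0.005)
print(f"Share of positive results that are real: {ppv:.1%}")
```

Under those assumed numbers only about 9% of flagged people would actually have the condition, so most follow-up procedures would be done on healthy people. A different prevalence changes the result a lot.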

Another big thing to note: we recently had a different but VERY similar headline about an AI that found typhoid early and could point it out more accurately than doctors could.

But when they examined the AI to see what it was doing, it turned out that it was weighing the specs of the machine being used to do the scan... An older machine means the area was likely poorer and therefore more likely to have typhoid. The AI wasn't pointing out whether someone had typhoid; it was just telling you whether they were in a rich area or not.

That's actually really smart. But that info wasn't given to doctors examining the scan, so it's not a fair comparison. It's a valid diagnostic technique to focus on the particular problems in the local area.

"When you hear hoofbeats, think horses not zebras" (outside of Africa)

That is quite a statement that it still had a better detection rate than doctors.

What is more important, save life or not offend people?

The thing is tho... It has a better detection rate ON THE SAMPLES THEY HAD but because it wasn't actually detecting anything other than wealth there was no way for them to trust it would stay accurate.

Citation needed.

Usually detection rates are given on a new set of samples. On the samples they used for training, the detection rate would be 100% by definition.

Right, there's typically separate "training" and "validation" sets for a model to train, validate, and iterate on, and then a totally separate "test" dataset that measures how effective the model is on similar data that it wasn't trained on.

If the model gets good results on the validation dataset but worse results on the test dataset, that typically means it's "overfit". Essentially, the model started memorizing frivolous details specific to the validation set. Those details improve evaluation results on that specific dataset, but they do nothing for, or even hurt, the results on the test set and other datasets that weren't a part of training. Basically, the model failed to abstract what it's supposed to detect, only managing good results in validation through brute memorization.

I'm not sure if that's quite what's happening in maven's description though. If it's real, my initial thoughts are an unrepresentative dataset + failing to reach high accuracy to begin with. I buy that there's a correlation between machine specs and positive cases, but I'm sure it's not a perfect correlation. Like maven said, old areas get new machines sometimes. If the model's accuracy was never high to begin with, that correlation may just be the model's best guess. Even though I'm sure that it would always take machine specs into account as long as they're part of the dataset, if actual symptoms correlate more strongly to positive diagnoses than machine specs do, then I'd expect the model to evaluate primarily on symptoms, and thus be more accurate. Sorry, this got longer than I wanted.
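For anyone who hasn't seen the split described above, here is a minimal sketch in plain Python. The function name, the 70/15/15 proportions, and the fixed seed are all assumptions for illustration; real projects usually lean on a library helper instead.

```python
import random


def three_way_split(samples, val_fraction=0.15, test_fraction=0.15, seed=0):
    """Shuffle once, then carve out disjoint train / validation / test subsets."""
    shuffled = list(samples)
    random.Random(seed).shuffle(shuffled)  # fixed seed so the split is repeatable
    n_test = round(len(shuffled) * test_fraction)
    n_val = round(len(shuffled) * val_fraction)
    test_set = shuffled[:n_test]                 # touched only for the final score
    val_set = shuffled[n_test:n_test + n_val]    # used while tuning and iterating
    train_set = shuffled[n_test + n_val:]        # what the model actually fits on
    return train_set, val_set, test_set


train_set, val_set, test_set = three_way_split(range(1000))
print(len(train_set), len(val_set), len(test_set))
```

The key property is that the three subsets never share a sample, so a good test score can't come from memorizing examples seen earlier.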

It's no problem to have a longer description if you want to get nuance. I think that's a good description and fair assumptions. Reality is rarely as black and white as reddit/lemmy wants it to be.

What if one of those lower economic areas decides that the machine is too old and they need to replace it with a brand new one? Now every single case is a false negative because of how highly that was rated in the system.

The data they had collected followed that trend, but there is no reason to think that it'll last forever or remain consistent, because it isn't about the person, it's just about class.

The goalpost has been moved so far I now need binoculars to see it
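A toy version of that failure mode, as a sketch. All patient counts are invented, and `shortcut_model` is a hypothetical stand-in for a model that learned the scanner instead of the disease; it is not the system from the headline.

```python
# Each record is (machine_is_old, has_disease). All counts are made up.
def make_patients(n_old_sick, n_old_healthy, n_new_sick, n_new_healthy):
    return ([(True, True)] * n_old_sick + [(True, False)] * n_old_healthy
            + [(False, True)] * n_new_sick + [(False, False)] * n_new_healthy)


def shortcut_model(machine_is_old):
    """A 'diagnosis' that only looks at the scanner, never at the patient."""
    return machine_is_old


def sensitivity(patients):
    """Fraction of truly sick patients that the shortcut model flags."""
    sick = [p for p in patients if p[1]]
    caught = [p for p in sick if shortcut_model(p[0])]
    return len(caught) / len(sick)


# Collection-era data: sick patients are mostly scanned on old machines.
before = make_patients(n_old_sick=30, n_old_healthy=70,
                       n_new_sick=5, n_new_healthy=95)
# Same patients, but every old machine has been replaced with a new one.
after = [(False, sick) for _, sick in before]

print(f"before: {sensitivity(before):.0%}, after: {sensitivity(after):.0%}")
```

Nothing about the patients changed between the two runs, yet the share of sick people caught drops to zero once the hardware is swapped.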

Not at all, in this case.

A false positive of even 50% can mean telling the patient "they are at a higher risk of developing breast cancer and should get screened every 6 months instead of every year for the next 5 years".

Keep in mind that women have about a 12% chance of getting breast cancer at some point in their lives. During the highest-risk years it's a 2 percent chance per year, so a machine with a 50% false positive rate for a 5-year prediction would still only be telling like 15% of women to be screened more often.
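A rough way to check that kind of estimate yourself. This sketch assumes a constant 2% annual risk, independent years, and a model that catches every true case, all simplifications. The share of women flagged depends heavily on how "50% false positive" is read, so both common readings are shown.

```python
annual_risk = 0.02  # from the comment: ~2% per year in the highest-risk years
years = 5

# Chance of at least one diagnosis in the window, ASSUMING independent years.
five_year_risk = 1 - (1 - annual_risk) ** years

# Reading A: half of all flagged women are false alarms
# (and every true case is flagged, which is an assumption).
flagged_reading_a = five_year_risk / 0.5

# Reading B: half of all women who stay healthy get flagged anyway.
flagged_reading_b = five_year_risk + 0.5 * (1 - five_year_risk)

print(f"5-year risk:        {five_year_risk:.1%}")
print(f"flagged, reading A: {flagged_reading_a:.1%}")
print(f"flagged, reading B: {flagged_reading_b:.1%}")
```

The two readings give very different screening loads, which is why it matters whether a quoted rate is measured against flagged patients or against healthy ones.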

Ok, I'll concede. Finally a good use for AI. Fuck cancer.

I can do that too, but my rate of success is very low

pretty sure iterate is the wrong word choice there

That's not the only issue with the English-esque writing.

100% true, just the first thing that stuck out at me

I suppose they just dropped the "re" off of "reiterate" since they're saying it for the first time.

Dude needs to use AI to fix his fucking grammar.

Btw, my dentist used AI to identify potential problems in a radiograph. The result was pretty impressive. Have to get a filling tho.

https://youtube.com/shorts/xIMlJUwB1m8?si=zH6eF5xZ5Xoz_zsz

Detecting is not enough to be useful.

The test is 90% accurate, that's still pretty useful. Especially if you are simply putting people into a high-risk group that needs to be more closely monitored.

“90% accurate” is a non-statement. It’s like you haven’t even watched the video you respond to. Also, where the hell did you pull that number from?

How specific is it and how sensitive is it is what matters. And if Mirai in https://www.science.org/doi/10.1126/scitranslmed.aba4373 is the same model that the tweet mentions, then neither its specificity nor sensitivity reach 90%. And considering that the image in the tweet is trackable to a publication in the same year (https://news.mit.edu/2021/robust-artificial-intelligence-tools-predict-future-cancer-0128), I’m fairly sure that it’s the same Mirai.
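A quick sketch of why a bare accuracy figure says so little. The population below is hypothetical (1000 people, 10 with the disease) and the function name is made up for the example; none of these numbers come from the Mirai paper.

```python
def confusion_metrics(tp: int, fp: int, tn: int, fn: int) -> dict:
    """Accuracy, sensitivity and specificity from confusion-matrix counts."""
    total = tp + fp + tn + fn
    return {
        "accuracy": (tp + tn) / total,      # share of all calls that were right
        "sensitivity": tp / (tp + fn),      # share of sick people caught
        "specificity": tn / (tn + fp),      # share of healthy people cleared
    }


# HYPOTHETICAL population: 1000 people, 10 of whom have the disease.
# This "model" simply tells everyone they are healthy.
always_negative = confusion_metrics(tp=0, fp=0, tn=990, fn=10)
print(always_negative)
```

Here a model that never finds a single case still scores 99% accuracy, which is why sensitivity and specificity are reported separately.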

> Also, where the hell did you pull that number from?

Well, you can just do the math yourself, it's pretty straight-forward.

However, more to the point, it's taken right from around 38 seconds into the video. Kind of funny to be accused of "not watching the video" by someone who is implying the number was pulled from nowhere, when it's right in the video.

I certainly don't think this closes the book on anything, but I'm responding to your claim that it's not useful. If this is a cheap and easy test, it's a great screening tool putting people into groups of low risk/high risk for which further, maybe more expensive/specific/sensitive, tests can be done. Especially if it can do this early.

I had a housemate a couple of years ago who had a side job where she'd look through a load of these and confirm which were accurate. She didn't say it was AI though.

I really wouldn't call this AI. It is more or less an image identification system that relies on machine learning.

Nooooooo you're supposed to use AI for good things and not to use it to generate meme images.

I think you mean mammary images?

Haha I love Gell-Mann amnesia. A few weeks ago there was news about speeding up the internet to gazillion bytes per nanosecond and it turned out to be fake.

Now this thing is all over the internet and everyone believes it.

Good news, but it's not "AI". Please stop calling it that.

it's ai, it all is. the code that controls where the creepers in Minecraft go? AI. the tiny little neural network that can detect numbers? also AI! is it AGI? no. it's still AI. it's not that modern tech is stealing the term ai, scifi movies are the ones that started misusing it and cash grab startups are riding the hypetrain.